Research

I'm a full-stack roboticist: I believe robotics must be solved through progress in many integral components. While scaling up data collection is important, we also need to design the right hardware (e.g., manipulators and contact sensors) and learning algorithms (e.g., learning from imbalanced sensor data) to enable robust policies on real robots for contact-rich tasks. To this end, my research includes:

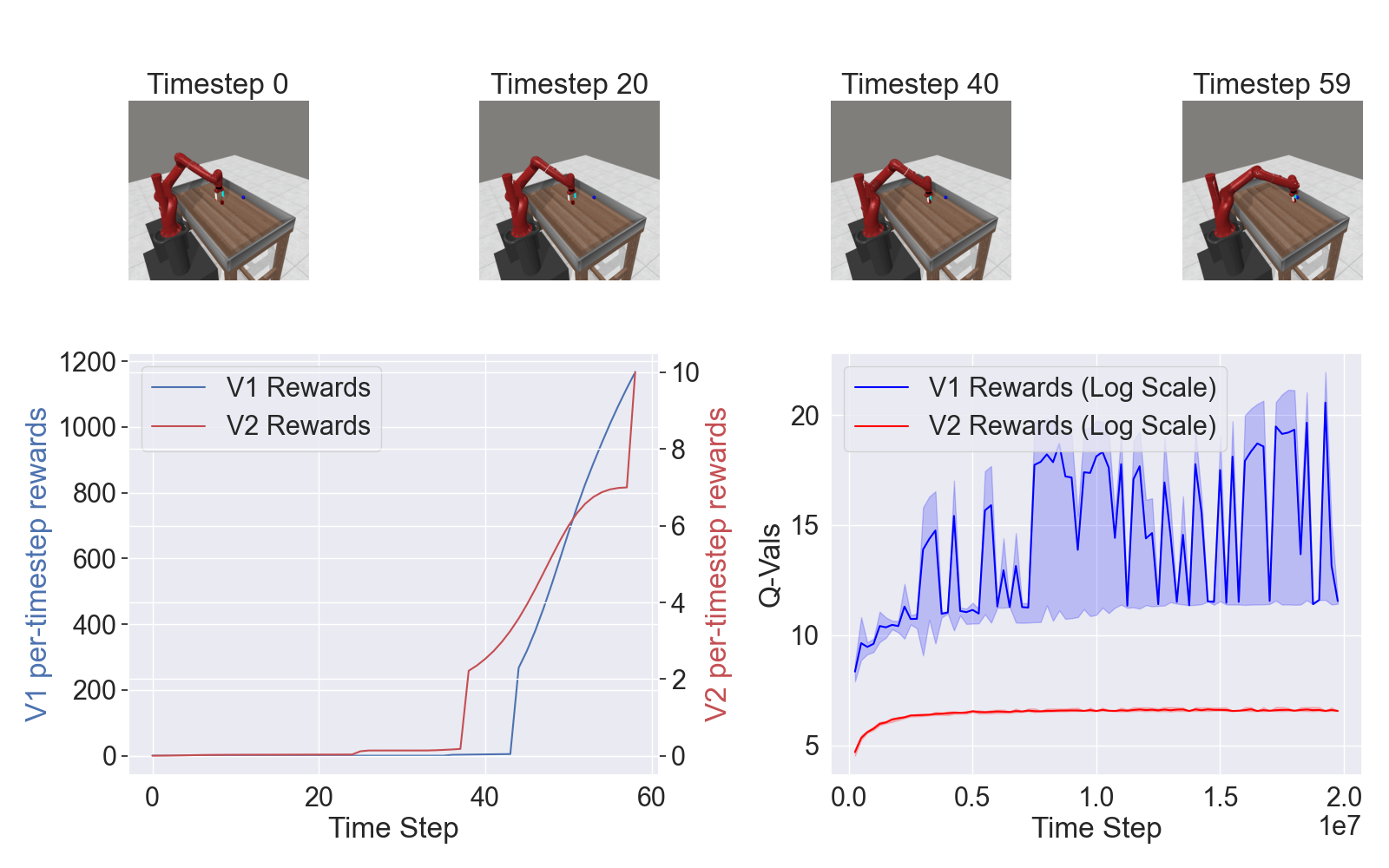

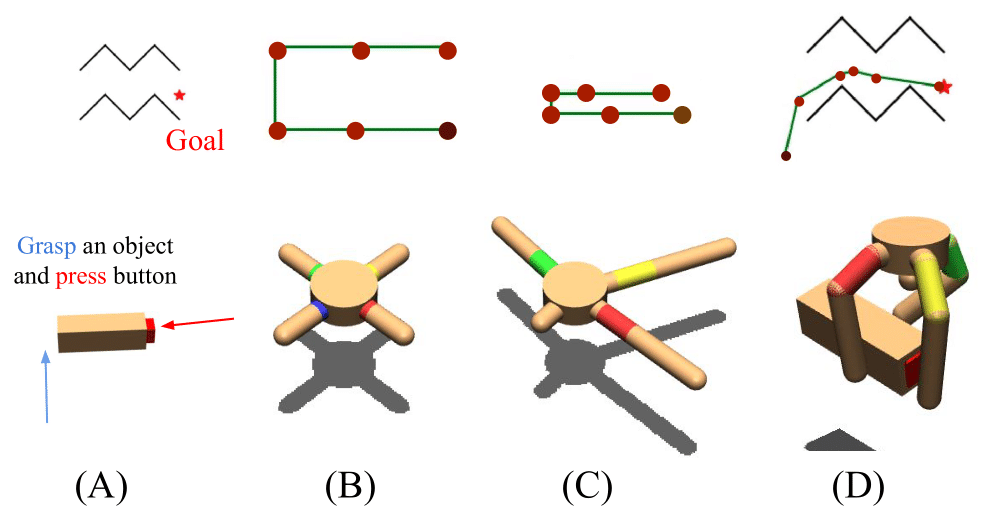

- Policy learning (e.g., imitation learning, reinforcement learning) for contact-rich tasks;

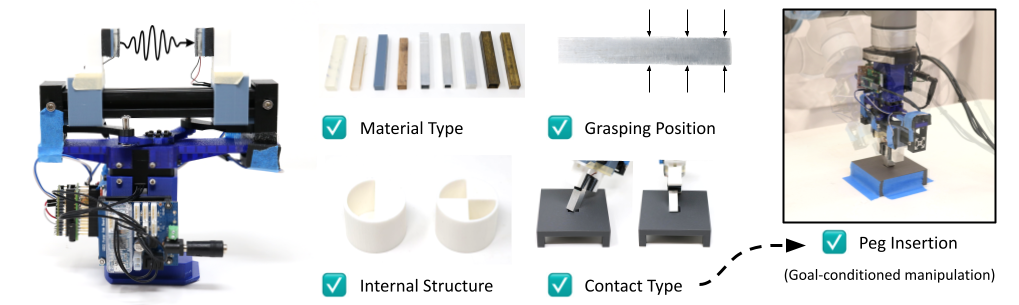

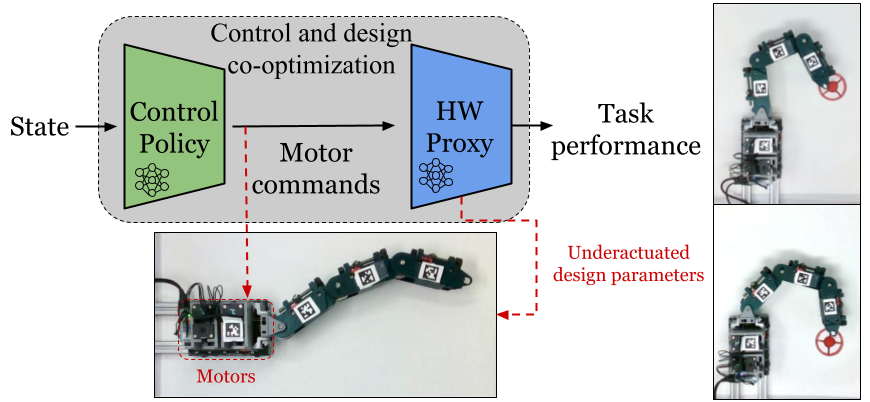

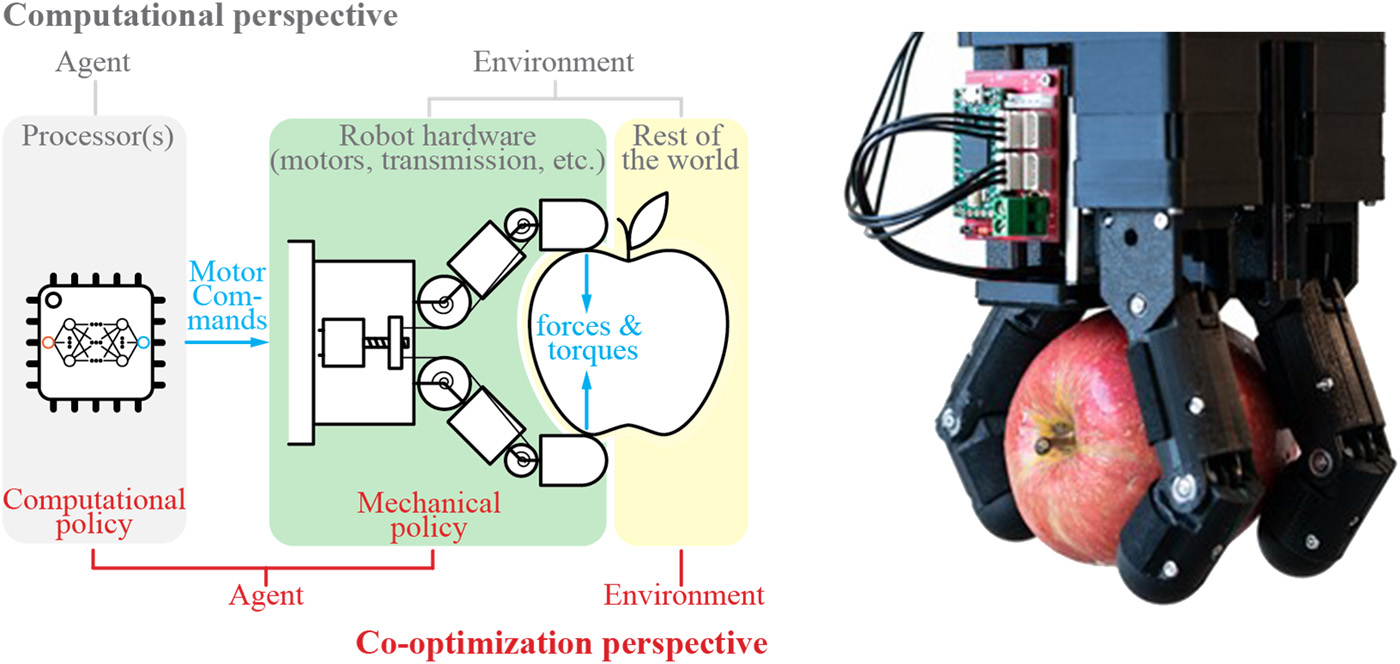

- Hardware design (e.g., manipulators, data collection systems, and contact sensors) and task-driven hardware optimization;

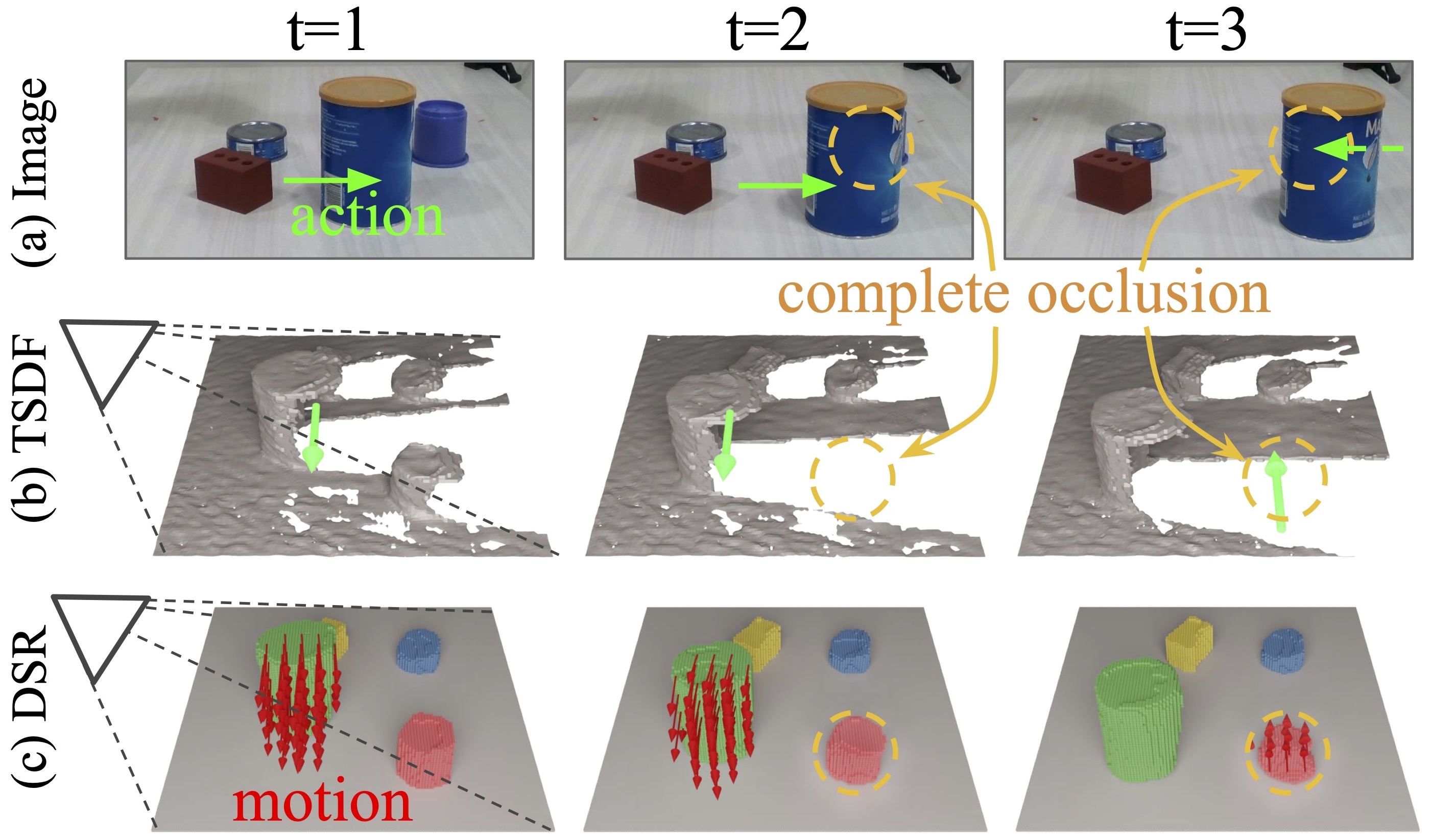

- Learning from multi-sensor and imbalanced data;

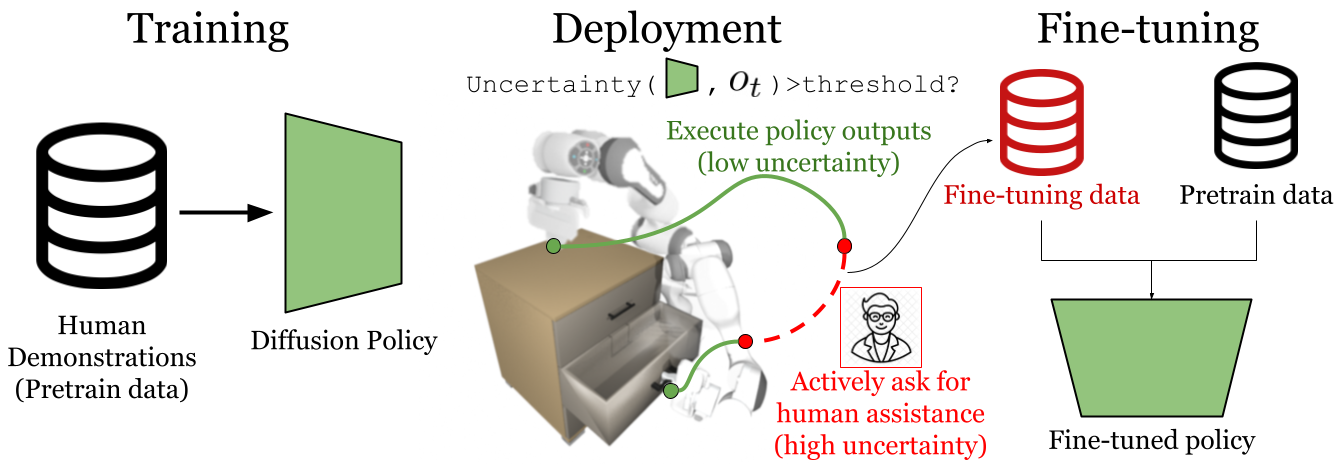

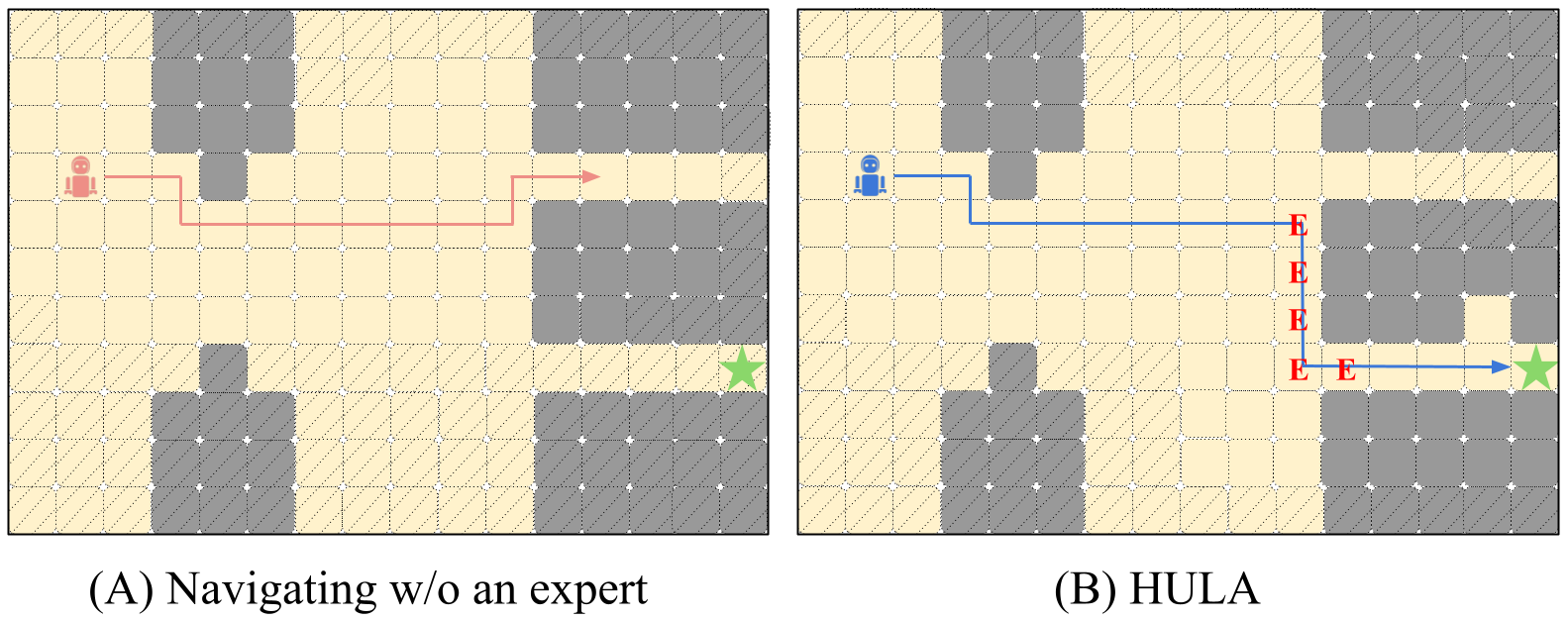

- Human-in-the-loop policy learning and deployment.

My full research statement will be available soon.

Publications (* indicates equal contribution and joint first authorship)

Eric T. Chang*, Peter Ballentine*, Zhanpeng He*, Do-gon Kim, Kai Jiang, Hua-Hsuan Liang, Joaquin Palacios, William Wang, Ioannis Kymissis, Matei Ciocarlie

Sharfin Islam*, Zewen Chen*, Zhanpeng He*, Swapneel Bhatt, Andres Permuy, Brock Taylor, James Vickery, Zhengbin Lu, Cheng Zhang, Pedro Piacenza, Matei Ciocarlie

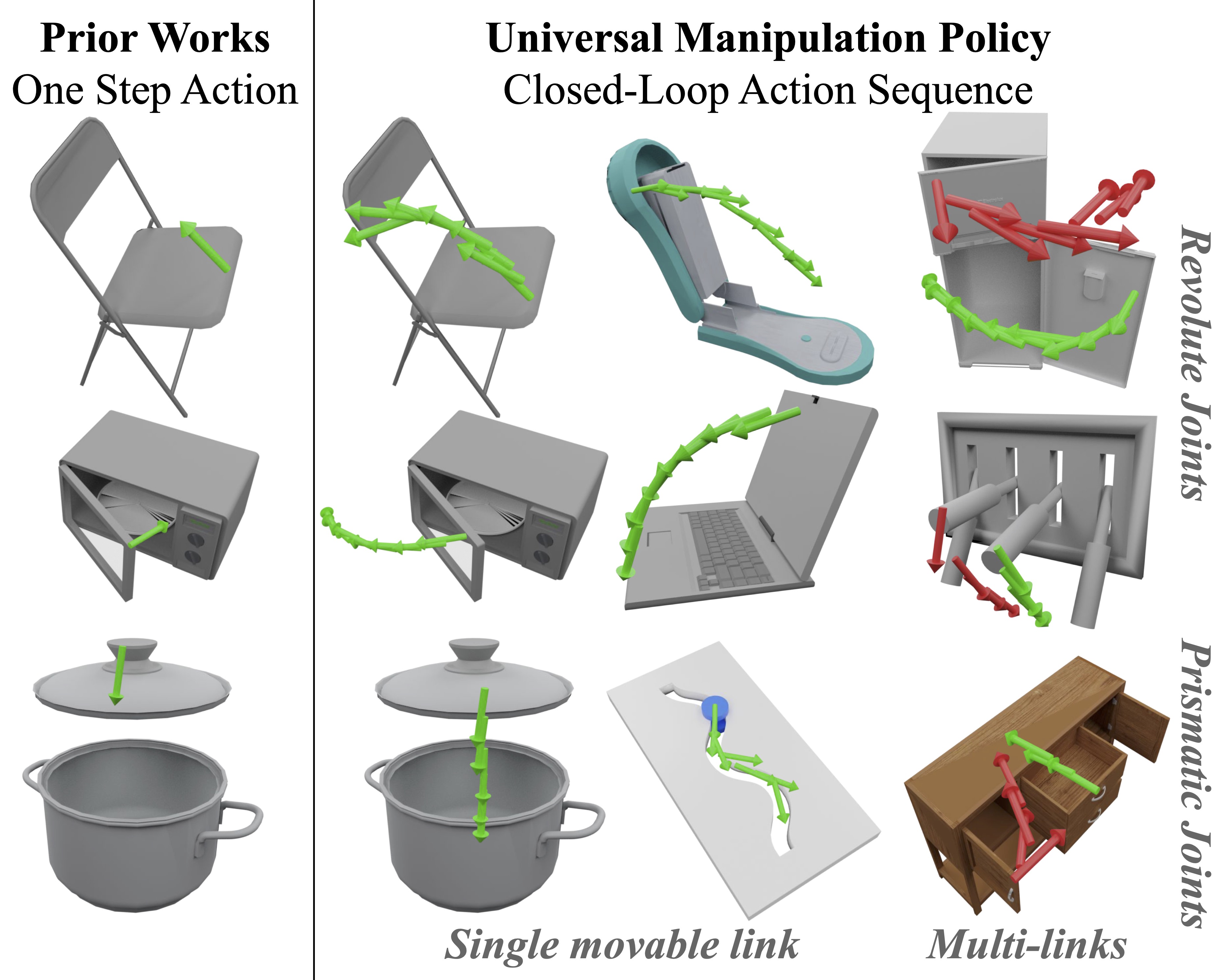

Zhanpeng He*, Yifeng Cao*, Matei Ciocarlie

Reginald McLean, Evangelos Chatzaroulas, Luc McCutcheon, Frank Röder, Tianhe Yu, Zhanpeng He, KR Zentner, Ryan Julian, JK Terry, Isaac Woungang, Nariman Farsad, Pablo Samuel Castro

Do-Gon Kim*, Kaidi Zhang*, Hua-Hsuan Liang, Eric T. Chang, Zhanpeng He, Ioannis Kymissis, Matei Ciocarlie

Sharfin Islam*, Zhanpeng He*, Matei Ciocarlie

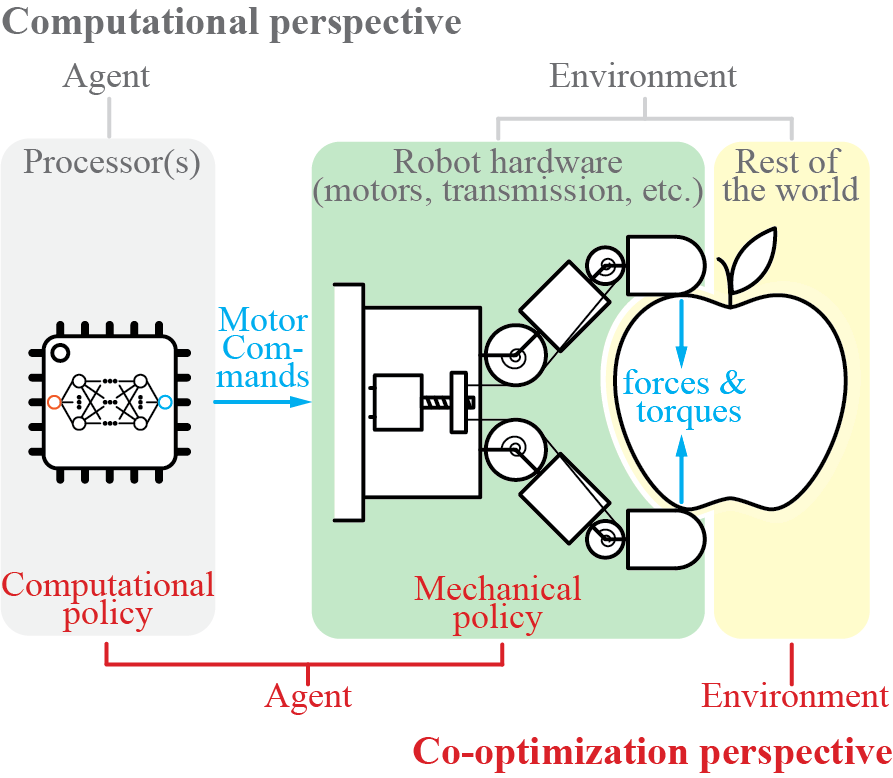

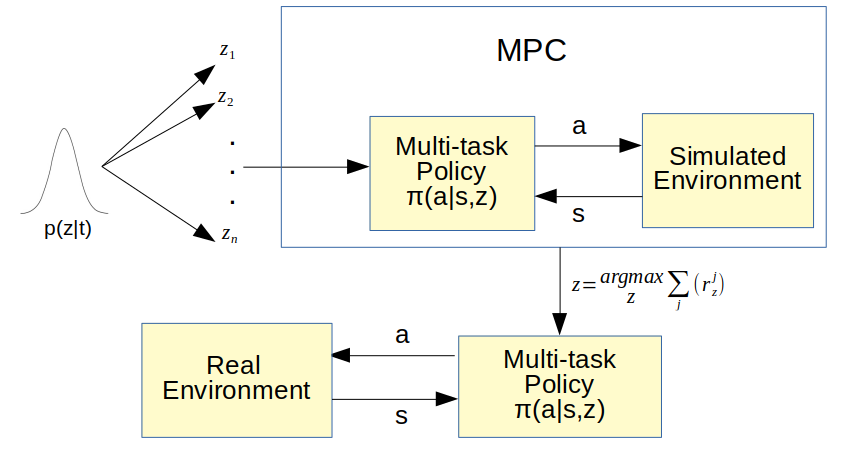

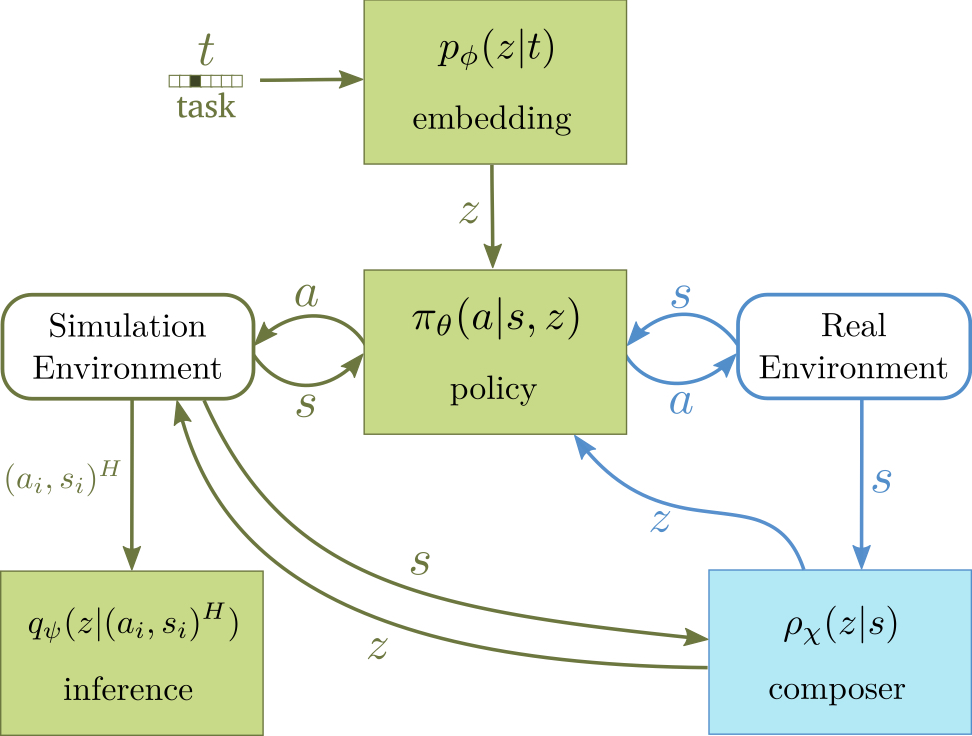

Zhanpeng He, Matei Ciocarlie

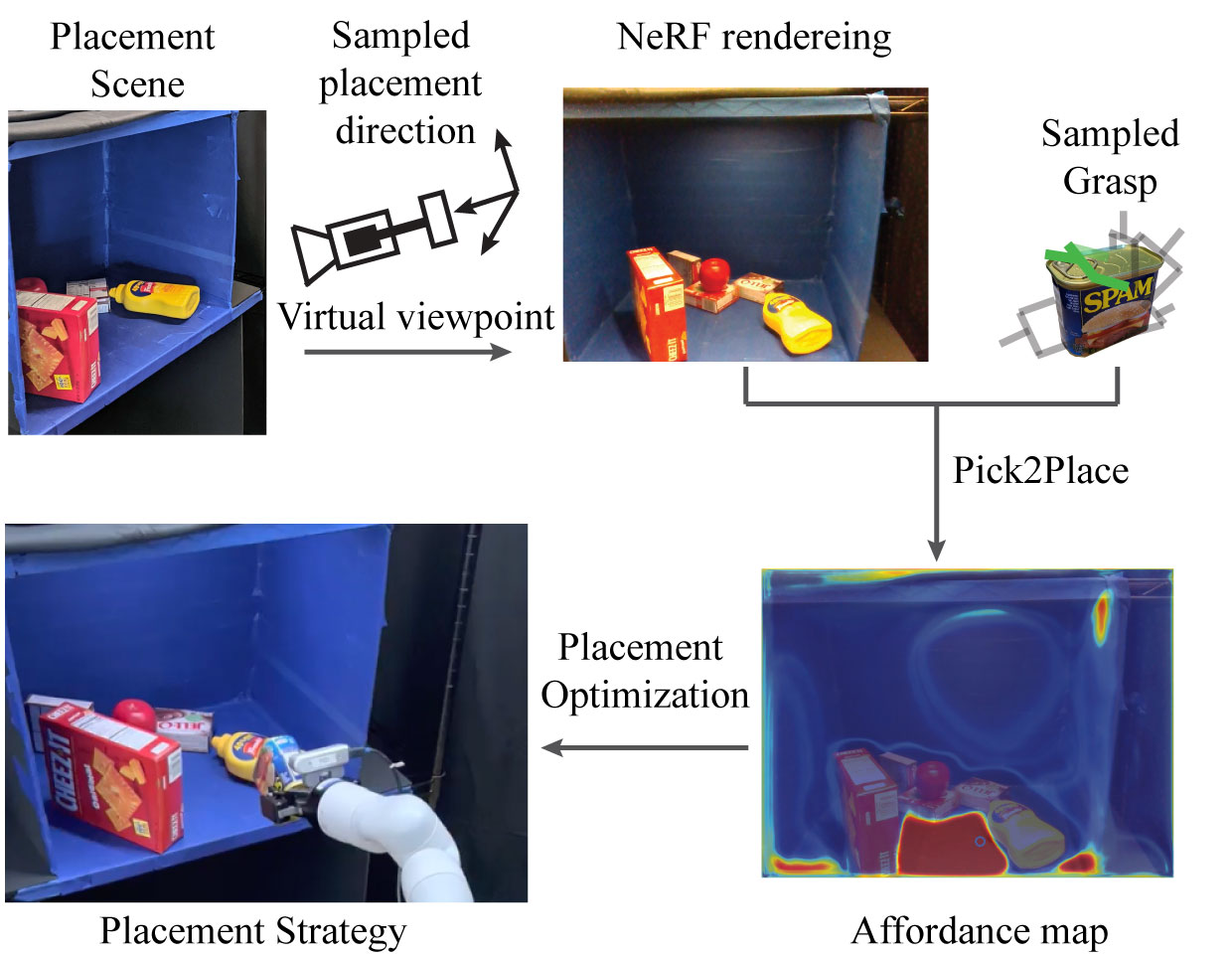

Siddharth Singi*, Zhanpeng He*, Alvin Pan, Sandip Patel, Gunnar A. Sigurdsson, Robinson Piramuthu, Shuran Song, Matei Ciocarlie

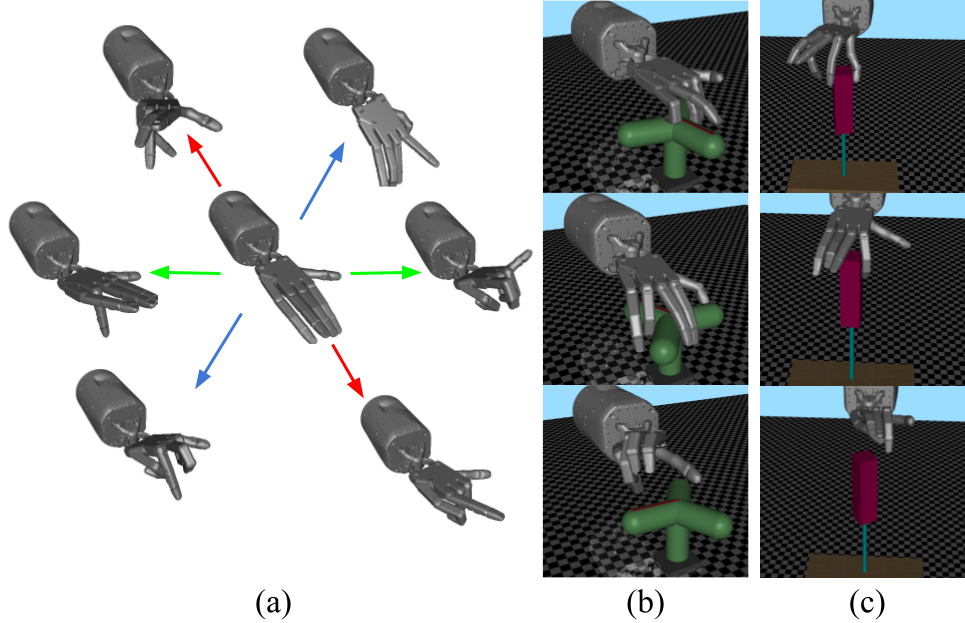

Zhanpeng He, Nikhil Chavan-Dafle, Jinwook Huh, Shuran Song, Volkan Isler

Software

I was a member of rlworkgroup and took part in the development of several open-source robot learning projects.

- Garage: A toolkit for reproducible reinforcement learning research.

- Dowel: A little logger for machine learning research.

- Meta-World: A collection of environments for benchmarking meta-learning and multi-task reinforcement learning algorithms.